This is the next in a series of technical posts relating to EVPN, in particular PBB-EVPN (provider backbone bridging Ethernet VPN). It attempts to explain the basic setup, the application and the problems solved within a very large layer-2 environment. Readers new to EVPN may wish to start with my first post, which gives examples of the most basic implementation of regular EVPN:

https://tgregory.org/2016/06/04/evpn-in-action-1/

Regular EVPN is without a doubt the future of MPLS-based multi-point layer-2 VPN connectivity. It adds the highly scalable BGP-based control-plane that's been used to good effect in layer-3 VPNs for over a decade. It also has much better mechanisms for handling BUM (broadcast, unknown unicast and multicast) traffic, and it can properly do active-active layer-2 forwarding. Because EVPN PEs all synchronise their ARP tables with one another, you can design large layer-2/layer-3 networks that stretch across numerous data centres or POPs. You can then move machines around at layer-2 or layer-3 without having to re-address or re-provision. You can learn how to do this here:

https://tgregory.org/2016/06/11/inter-vlan-routing-mobility/

But like any technology, it can never be perfect from day one. EVPN contains more layer-2 and layer-3 functionality than just about any single protocol developed so far, but it comes at a cost: control-plane resources. Consider the following scenario:

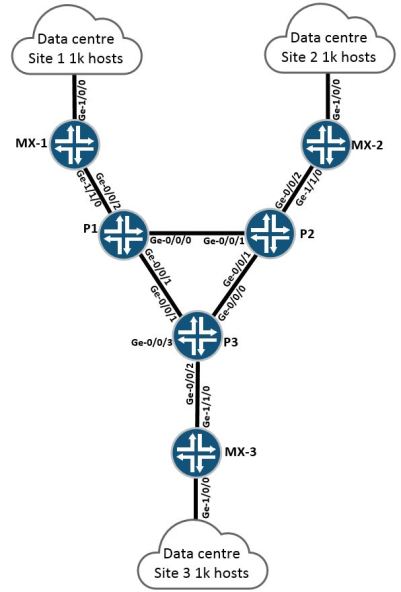

The above is an extremely simple example of a network with 3x data centres, each with 1k hosts sat behind it. The 3x “P” routers in the centre of the network run only ISIS and LDP. Each edge router (MX-1 through MX-3) runs basic EVPN, with all hosts in a single VLAN.

A quick recap of the basic config (identical on all 3x PE routers, with the exception of IP addresses):

```
interfaces {
    ge-1/0/0 {
        flexible-vlan-tagging;
        encapsulation flexible-ethernet-services;
        unit 100 {
            encapsulation vlan-bridge;
            vlan-id 100;
        }
    }
}
routing-instances {
    EVPN-100 {
        instance-type virtual-switch;
        route-distinguisher 1.1.1.1:100;
        vrf-target target:100:100;
        protocols {
            evpn {
                extended-vlan-list 100;
            }
        }
        bridge-domains {
            VL-100 {
                vlan-id 100;
                interface ge-1/0/0.100;
            }
        }
    }
}
protocols {
    bgp {
        group fullmesh {
            type internal;
            local-address 10.10.10.1;
            family evpn {
                signaling;
            }
            neighbor 10.10.10.2;
            neighbor 10.10.10.3;
        }
    }
}
```

This is only a small-scale setup using MX-5 routers, but it's easy to use it to project the problem: the EVPN control-plane is quite resource intensive.

With 1k hosts per site, that equates to 3k EVPN BGP routes that need to be advertised, which isn't that bad. However, a large service provider may span many data centres across a whole country, or even multiple countries. At that scale 3k routes is a tiny amount, and you may well have hundreds of thousands or millions of EVPN routes spread across hundreds or thousands of edge routers.

For IPv4 or IPv6, hundreds of thousands or millions of routes is normally a problem that can be dealt with easily. We can rely on summarising these routes into blocks or aggregates in BGP to keep things much more sensible.

In the layer-2 world, however, it's not possible to summarise mac-addresses, as they're mostly completely random. In regular EVPN, 1 million hosts equates to 1 million EVPN MAC routes advertised everywhere. That isn't going to run very smoothly at all once we start moving hosts around, or have a large-scale failure in the network that requires huge numbers of hosts to move from one place to another.

If I spin up the 3x 1k hosts in IXIA, spread across all 3x sites, we can clearly see the amount of EVPN control-plane state being generated and advertised across the network:

```
tim@MX5-1> show evpn instance extensive
Instance: EVPN-100
  Route Distinguisher: 1.1.1.1:100
  Per-instance MAC route label: 300640
  MAC database status                Local  Remote
    Total MAC addresses:              1000    2000
    Default gateway MAC addresses:       0       0
  Number of local interfaces: 1 (1 up)
    Interface name  ESI                            Mode             Status
    ge-1/0/0.100    00:00:00:00:00:00:00:00:00:00  single-homed     Up
  Number of IRB interfaces: 0 (0 up)
  Number of bridge domains: 1
    VLAN ID   Intfs / up    Mode        MAC sync  IM route label
    100       1    1        Extended    Enabled   300704
  Number of neighbors: 2
    10.10.10.2
      Received routes
        MAC address advertisement:           1000
        MAC+IP address advertisement:           0
        Inclusive multicast:                    1
        Ethernet auto-discovery:                0
    10.10.10.3
      Received routes
        MAC address advertisement:           1000
        MAC+IP address advertisement:           0
        Inclusive multicast:                    1
        Ethernet auto-discovery:                0
  Number of ethernet segments: 0
```

And obviously all of this information is injected into BGP, all of which needs to be advertised and distributed:

```
tim@MX5-1> show bgp summary
Groups: 1 Peers: 2 Down peers: 0
Table          Tot Paths  Act Paths Suppressed    History Damp State    Pending
bgp.evpn.0
                    2002       2002          0          0          0          0
Peer                     AS      InPkt     OutPkt    OutQ   Flaps Last Up/Dwn State|#Active/Received/Accepted/Damped...
10.10.10.2              100       1246       1410       0       1     5:51:04 Establ
  bgp.evpn.0: 1001/1001/1001/0
  EVPN-100.evpn.0: 1001/1001/1001/0
  __default_evpn__.evpn.0: 0/0/0/0
10.10.10.3              100       1243       1393       0       0     5:50:51 Establ
  bgp.evpn.0: 1001/1001/1001/0
  EVPN-100.evpn.0: 1001/1001/1001/0
  __default_evpn__.evpn.0: 0/0/0/0

tim@MX5-1>
```

With our 3k host setup, things will obviously be just fine. I imagine a good 20-30k hosts would probably be OK too, in a well-designed network running on routers with big CPUs and plenty of memory. However, I suspect that in a large network already carrying the full BGP table, plus L3VPNs and everything else, adding an additional 90-100k EVPN routes might not be such a good idea.
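To put rough numbers on that, here's a small Python sketch that counts the EVPN routes one PE receives. It assumes the shape of this lab: one VLAN, single-homed hosts, MAC-only type-2 routes (no MAC+IP), and one type-3 inclusive-multicast route per remote PE. That matches the "Received routes" counters above. The 100-site figure is a hypothetical projection, not a measurement.

```python
def evpn_routes_received(sites: int, hosts_per_site: int) -> int:
    """BGP EVPN routes one PE receives from its remote peers.

    Assumes the lab's shape: one EVI/VLAN, single-homed hosts, MAC-only
    type-2 routes (no MAC+IP) and one type-3 inclusive-multicast route per PE.
    """
    remote_pes = sites - 1
    mac_routes_per_peer = hosts_per_site  # one type-2 route per remote host
    im_routes_per_peer = 1                # one type-3 route per remote PE
    return remote_pes * (mac_routes_per_peer + im_routes_per_peer)


if __name__ == "__main__":
    print(evpn_routes_received(sites=3, hosts_per_site=1000))    # 2002, as in bgp.evpn.0
    print(evpn_routes_received(sites=100, hosts_per_site=1000))  # 99099 (hypothetical)
```

In regular EVPN the route count a PE has to hold grows linearly with the number of remote hosts.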

So what’s available for large-scale layer-2 networks?

Normally in large layer-2 networks, QinQ is enough to provide sufficient scale for most large enterprises. With QinQ (802.1ad) we simply multiplex VLANs by adding a second VLAN service-tag (S-TAG), which allows us to represent many different customer tags, or C-TAGs. The 12-bit VLAN ID in the dot1q header allows for 4096 values (4094 usable). Adding a second tag therefore gives 4096 x 4096 possible tag combinations, which is just under 16.8 million. However, the provider network tells one service from another by the S-TAG alone. The number of distinct services it can carry is therefore bounded by the 4094 usable S-TAG values.

As a quick recap on QinQ: in the below example, all frames from Customer 1 for VLANs 1-4094 are encapsulated with an S-TAG of 100. All frames from Customer 2, for the same VLAN range of 1-4094, are encapsulated in S-TAG 200.

The problem with QinQ (PBN, provider-bridged networks) is that it's essentially limited to around 4000 S-TAG services. The millions of tag combinations sound like a lot. However, if you're a large service provider with tens of millions of consumers, businesses and big-data products, a few thousand service instances isn't very much.

You might run QinQ across a large switched enterprise network. Or you might break out individual high-bandwidth interfaces into a switch and sell hundreds of leased-line services in a multi-tenant design using VPLS. Either way, you're still working within that same 12-bit tag space. And that's before we mention things like active-active multi-homing, which doesn't work with VPLS.

Another disadvantage is that with QinQ, every device in the network is required to learn all the customer mac addresses – so it’s quite easy to see the scaling problems from the beginning.

For truly massive scale, Big-Data, DCI, provider-transport etc – we need to make the leap to PBB (provider backbone bridging) but what exactly is PBB?

Before we look at PBB-EVPN, we should first take some time to understand what basic PBB is and what problems it solves:

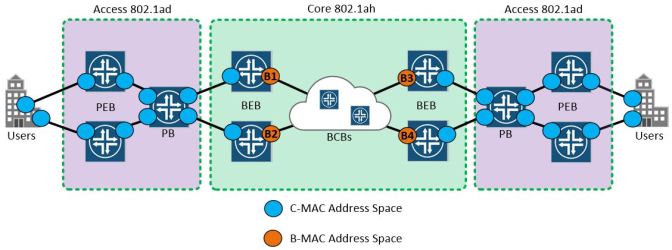

PBB was originally defined as 802.1ah, or “mac in mac”, and is layer-2 end to end. Instead of merely adding another VLAN tag, PBB adds a complete new MAC layer in front of the customer frame. It encapsulates the frame in a new set of headers, separating it from the provider domain. This allows for complete transparency between the customer network and the provider network.

Instead of multiplexing VLANs, PBB uses a 24-bit I-SID (service ID). The fact that it's 24 bits gives us an immediate idea of the scale we're talking about here: roughly 16 million possible services. PBB also introduces a backbone header, made up of backbone source/destination MACs (B-MACs) and a B-TAG, or backbone VLAN tag. This essentially hides the customer source/destination mac-addresses behind a backbone entity, removing the requirement for every device in the network to learn every single customer mac-address. It's analogous in some ways to IP address aggregation, because of the reduction in network state.
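A quick Python calculation puts the QinQ and PBB identifier spaces side by side. It assumes VLAN IDs 0 and 4095 are reserved, which leaves 4094 usable per tag. It counts every 24-bit I-SID value without subtracting any values the standard may reserve. The variable names are just for illustration.

```python
VID_BITS = 12    # 802.1Q / 802.1ad VLAN ID field
ISID_BITS = 24   # 802.1ah I-SID field

vid_values = 2 ** VID_BITS                  # 4096 raw values per tag
usable_vids = vid_values - 2                # VIDs 0 and 4095 are reserved
qinq_tag_pairs = usable_vids * usable_vids  # S-TAG x C-TAG combinations
qinq_core_services = usable_vids            # provider bridges separate services by S-TAG
pbb_services = 2 ** ISID_BITS               # raw I-SID values, no reservations subtracted

print(f"usable VIDs per tag:      {usable_vids:,}")
print(f"S-TAG/C-TAG combinations: {qinq_tag_pairs:,}")
print(f"QinQ services (S-TAG):    {qinq_core_services:,}")
print(f"PBB services (I-SID):     {pbb_services:,}")
```

Under those assumptions, the S-TAG/C-TAG product and the I-SID space come out at a similar size. The difference is that a single I-SID identifies a service across the backbone. A QinQ provider network, by contrast, can distinguish services only by the 12-bit S-TAG.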

Check the below diagram for a basic topology and list of basic PBB terms;

- PB = Provider bridge (802.1ad)

- PEB = Provider edge bridge (802.1ad)

- BEB = Backbone edge bridge (802.1ah)

- BCB = Backbone core bridge (802.1ah)

The “BEB” (backbone edge bridge) is the first immediate point of interest within PBB. It essentially forms the boundary between the access network and the core network, and introduces 2x new concepts:

- I-Component

- B-Component

The I-Component essentially forms the customer- or access-facing interface or routing instance. The B-Component is the backbone-facing PBB core instance. The B-Component uses B-MAC (backbone MAC) addressing to forward customer frames to the core based on the newly imposed B-MAC. In a regular PBN QinQ setup, forwarding would instead use the original S or C VLAN tags and the C-MAC (customer MAC).

In this case, the “BEB” (backbone edge bridge) forms the connectivity between the access and the core. On one side it maintains full layer-2 state with the access network. On the other side it operates only in B-MAC forwarding, where enormous numbers of services and C-MACs (customer MACs) on the access side can be represented by individual B-MAC addresses on the core side. This obviously drastically reduces the amount of control-plane processing. The effect is greatest in the core, on the “BCB” (backbone core bridges), where forwarding is performed using B-MACs only.

In terms of C-MAC and B-MAC, it makes things easier if you break the network up into two distinct spaces, namely “C-MAC space” and “B-MAC space”.

It’s pretty easy to talk about C-MAC or customer mac addressing as that’s something we’ve all been dealing with since we sent our first ping packet – however B-MACs are a new concept.

Within the PBB network, each “BEB” (backbone edge bridge) has one or more B-MAC identifiers. These are unique across the entire network and can be assigned automatically or statically by design.

The interesting part starts when we look at the packet flow from one side of the network to the other. In this case we'll use the above diagram to send a packet from left to right. Note how the composition of the frame changes at each section of the network, including the new PBB-encapsulated frame.

If we look at the packet flow from left to right – a series of interesting things happen;

- The user on the left-hand side sends a regular single-tagged frame with a destination MAC address of “C2” on the far right-hand side of the network.

- The ingress PEB (provider edge bridge) performs regular QinQ, pushing a new “S-VLAN” onto the frame to create a brand new QinQ frame

- The double-tagged frame traverses the network until it lands on the first BEB router. Here the frame is received, and the BEB maps the S-VLAN to the I-SID for that service and selects the destination B-MAC

- The BEB encapsulates the original frame with its C-VLAN and S-VLAN intact. It adds the new PBB encapsulation, complete with the PBB source and destination B-MACs (B4 and B2) and the I-SID, and forwards it into the core of the network

- The BCBs in the core forward the frame based only on the source and destination B-MACs, and take no notice of the original inner frame information

- The egress BEB strips the PBB header away and forwards the original QinQ frame onto the access network

- Eventually the egress PEB switch pops the S-VLAN and forwards the original frame with the destination mac of C2, to the user interface.
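The BEB's encapsulation step can be sketched in Python by building the 802.1ah header bytes. This is a minimal illustration, not how an MX builds frames. It follows the 802.1ah field layout in this order:

- B-DA and B-SA
- an optional B-TAG using EtherType 0x88A8
- an I-TAG using EtherType 0x88E7, carrying a 24-bit I-SID
- the customer's C-DA and C-SA

It leaves the priority and drop-eligibility bits at zero, and stops before the customer's own tags and payload. The function names are hypothetical. The MAC addresses are borrowed from the MX-1 output later in this post: a host behind MX-3 talking to a host behind MX-2.

```python
import struct
from typing import Optional

BTAG_TPID = 0x88A8  # B-TAG reuses the 802.1ad S-TAG EtherType
ITAG_TPID = 0x88E7  # 802.1ah I-TAG EtherType


def mac_to_bytes(mac: str) -> bytes:
    """Convert 'aa:bb:cc:dd:ee:ff' into 6 raw bytes."""
    raw = bytes(int(octet, 16) for octet in mac.split(":"))
    if len(raw) != 6:
        raise ValueError(f"not a 48-bit MAC address: {mac}")
    return raw


def pbb_header(b_da: str, b_sa: str, isid: int, c_da: str, c_sa: str,
               b_vid: Optional[int] = None, i_pcp: int = 0) -> bytes:
    """Build the 802.1ah header placed in front of the customer frame.

    Layout: B-DA | B-SA | [B-TAG] | I-TAG | C-DA | C-SA. The customer's own
    S-TAG/C-TAG, EtherType and payload would follow these bytes.
    """
    if not 0 <= isid < 2 ** 24:
        raise ValueError("I-SID must fit in 24 bits")
    header = mac_to_bytes(b_da) + mac_to_bytes(b_sa)
    if b_vid is not None:
        # B-TAG TCI = PCP(3) DEI(1) VID(12); PCP and DEI left at zero
        header += struct.pack("!HH", BTAG_TPID, b_vid & 0x0FFF)
    # I-TAG TCI = I-PCP(3) I-DEI(1) UCA(1) reserved(3) I-SID(24)
    itag_tci = ((i_pcp & 0x7) << 29) | isid
    header += struct.pack("!HI", ITAG_TPID, itag_tci)
    return header + mac_to_bytes(c_da) + mac_to_bytes(c_sa)


def read_isid(header: bytes, has_btag: bool) -> int:
    """Recover the I-SID from a header built by pbb_header()."""
    offset = 12 + (4 if has_btag else 0)
    tpid, tci = struct.unpack_from("!HI", header, offset)
    if tpid != ITAG_TPID:
        raise ValueError("no I-TAG at the expected offset")
    return tci & 0xFFFFFF


if __name__ == "__main__":
    # host behind MX-3 -> host behind MX-2, I-SID 100100 as in the lab
    hdr = pbb_header(b_da="a8:d0:e5:5b:75:c8", b_sa="a8:d0:e5:5b:94:60",
                     isid=100100, c_da="00:00:00:bc:2f:67", c_sa="00:00:00:bc:3c:65")
    print(len(hdr), read_isid(hdr, has_btag=False))  # 30 100100
```

The BCBs in the middle only ever need to look at the first 12 bytes (the B-MACs). The I-TAG and the customer addresses behind it are opaque to them.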

So that’s vanilla PBB in a nutshell – essentially, it’s a way of hiding the gigantic amount of customer mac-addresses behind a drastically smaller number of backbone mac-addresses, without the devices in the core having to learn and process all of the individual customer state. Combined with a new I-SID service identifier we can create an encapsulation that allows for a huge number of services.

But like most things – it’s not perfect.

In 2016 (and for literally the last decade), most modern networks have a simple MPLS core comprising PE and P routers. With PBB, we need the devices in the core to act as switches (the BCB, backbone core bridge), making forwarding decisions based on B-MAC addresses. That is obviously incompatible with a modern MPLS network, where we're switching packets between edge loopback addresses using MPLS labels and recursion.

So the obvious question is – can we replace the BCB element in the middle with MPLS – whilst stealing the huge service scaling properties of the BEB PBB edge?

The answer is yes! By combining PBB with EVPN, we can replace the BCB element of the core and signal the “B-Component” using EVPN BGP signalling. The whole thing is then encapsulated inside MPLS using PE and P routers, so the PBB-EVPN architecture now reflects something we're all a little more used to:

We now have the vast scale of PBB combined with the simplicity and elegance of a traditional MPLS core network, where the amount of network-wide state information has been drastically reduced. Regular PBB is layer-2 over layer-2; we've moved to a model that is much more like layer-2 over layer-2 over layer-3.

The next question is: how do we configure it? PBB-EVPN simplifies the control-plane across the core and lets huge numbers of layer-2 services transit the network in a much simpler manner. It is a little more complex to configure on Juniper MX series routers, but we'll go through it step by step 🙂

Before we look at the configuration – it’s easier to understand if we visualise what’s happening inside the router itself, by breaking the whole thing up into blocks;

Basically, we break the router up into several blocks. In Juniper, the customer-facing I-Component and the backbone-facing B-Component are configured as two separate routing-instances, each containing a bridge-domain, and the two bridge-domains are different. The I-Component routing-instance contains the following:

- the bridge-domain (BR-I-100) with the physical tagged interface facing the customer
- some service options, including the service-type, which is “ELAN” (a multi-point MEF carrier Ethernet standard)
- the I-SID we're going to use to identify the service, in this case “100100” for VLAN 100

The B-Component also contains a bridge-domain, “BR-B-100100”, which forms the backbone-facing bridge the B-MAC is sourced from. The B-Component also defines the EVPN PBB options used to signal the core.

These routing-instances are connected together by a pair of special interfaces;

- PIP – Provider instance port

- CBP – Customer backbone port

These interfaces join the I-Component and B-Component routing-instances together. They are a bit like the logical pseudo-interfaces normally found inside Juniper routers to connect certain logical elements together.

Let's take a look at the configuration of the routing-instances on Juniper MX:

- Note, PBB-EVPN seems to have been supported only in very recent versions of Junos, these devices are MX-5 routers running Junos 16.1R2.11

- All physical connectivity is done on TRIO via a “MIC-3D-20GE-SFP” card

```
PBB-EVPN-B-COMP {
    instance-type virtual-switch;
    interface cbp0.1000;
    route-distinguisher 1.1.1.1:100;
    vrf-target target:100:100;
    protocols {
        evpn {
            control-word;
            pbb-evpn-core;
            extended-isid-list 100100;
        }
    }
    bridge-domains {
        BR-B-100100 {
            vlan-id 999;
            isid-list 100100;
            vlan-id-scope-local;
        }
    }
}
PBB-EVPN-I-COMP {
    instance-type virtual-switch;
    interface pip0.1000;
    bridge-domains {
        BR-I-100 {
            vlan-id 100;
            interface ge-1/0/0.100;
        }
    }
    pbb-options {
        peer-instance PBB-EVPN-B-COMP;
    }
    service-groups {
        CUST1 {
            service-type elan;
            pbb-service-options {
                isid 100100 vlan-id-list 100;
            }
        }
    }
}
```

If we look at the configuration statement by statement, it works out as follows for the B-Component:

- "instance-type virtual-switch", "route-distinguisher" and "vrf-target" are the normal EVPN instance and route-distribution properties (RD/RT etc.)

- "interface cbp0.1000" brings the customer backbone port into the B-Component routing-instance; this logically links the B-Component to the I-Component

- "control-word" and "pbb-evpn-core" enable the control-word and switch on the PBB-EVPN core feature

- "extended-isid-list 100100" allows only an I-Component service with an I-SID of 100100 to be processed by the B-Component

- The "bridge-domains" stanza (BR-B-100100) holds the bridge-domain options

- "vlan-id 999" is currently unused but needs to be configured; any value can be used here

- "isid-list 100100" specifies the I-SID mapping

For the I-Component:

- "interface pip0.1000" adds the PIP (provider instance port) to the I-Component routing-instance

- The "bridge-domains" stanza (BR-I-100) holds standard bridge-domain settings. These add the physical customer-facing interface (ge-1/0/0.100) for VLAN-ID 100 to the bridge-domain and routing-instance

- "pbb-options" activates the PBB service, and its "peer-instance" statement names the "PBB-EVPN-B-COMP" routing-instance as the peer-instance for the service. This is how the I-Component is linked to the B-Component

- The "service-groups" stanza defines the service group, in this case "CUST1", with the service-type set to ELAN (the MEF standard for multipoint layer-2 connectivity). "isid 100100 vlan-id-list 100" sets the I-Component I-SID for VLAN-100 to 100100

Let's examine the PIP and CBP interfaces:

```
interfaces {
    ge-1/0/0 {
        flexible-vlan-tagging;
        encapsulation flexible-ethernet-services;
        unit 100 {
            encapsulation vlan-bridge;
            vlan-id 100;
        }
    }
    cbp0 {
        unit 1000 {
            family bridge {
                interface-mode trunk;
                bridge-domain-type bvlan;
                isid-list all;
            }
        }
    }
    pip0 {
        unit 1000 {
            family bridge {
                interface-mode trunk;
                bridge-domain-type svlan;
                isid-list all-service-groups;
            }
        }
    }
}
```

- The ge-1/0/0 stanza is a standard gigabit Ethernet interface configured with vlan-bridge encapsulation for VLAN 100, standard stuff we're all used to seeing

- The cbp0 stanza is the CBP (customer backbone port) for unit 1000. Its bridge-domain-type is set to bvlan (backbone VLAN) and it accepts any I-SID ("isid-list all"); this connects the B-Component to the I-Component

- The pip0 stanza is the PIP (provider instance port) for the same unit 1000, configured as an svlan bridge with an I-SID list covering all service groups ("isid-list all-service-groups")

- The PIP0 interface connects the I-Component to the B-Component
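The I-Component, B-Component, PIP and CBP all have to reference each other consistently, so it can help to write down what must line up. The Python sketch below models just the statements discussed above as plain dataclasses. This is a hypothetical representation, not a Junos API. It checks the following, based on my reading of the example:

- the peer-instance names the B-Component
- each service-group I-SID appears in both the B-Component's extended-isid-list and a bridge-domain isid-list
- each mapped VLAN has an I-Component bridge-domain

These are not Junos commit checks.

```python
from dataclasses import dataclass, replace
from typing import Dict, FrozenSet, List


@dataclass
class BComponent:
    """Hypothetical model of the B-Component statements discussed above."""
    name: str
    cbp_interface: str                        # "interface cbp0.1000"
    extended_isid_list: FrozenSet[int]        # protocols evpn extended-isid-list
    bd_isid_lists: Dict[str, FrozenSet[int]]  # bridge-domain name -> isid-list


@dataclass
class IComponent:
    """Hypothetical model of the I-Component statements discussed above."""
    name: str
    pip_interface: str                   # "interface pip0.1000"
    peer_instance: str                   # pbb-options peer-instance
    bd_vlans: Dict[str, int]             # bridge-domain name -> vlan-id
    service_isids: Dict[int, List[int]]  # pbb-service-options: isid -> vlan-id-list


def check_pbb_evpn(i_comp: IComponent, b_comp: BComponent) -> List[str]:
    """Return cross-reference problems (an empty list if everything lines up)."""
    problems: List[str] = []
    if i_comp.peer_instance != b_comp.name:
        problems.append(f"peer-instance {i_comp.peer_instance} is not {b_comp.name}")
    if not i_comp.pip_interface.startswith("pip0."):
        problems.append(f"I-Component has no pip0 unit: {i_comp.pip_interface}")
    if not b_comp.cbp_interface.startswith("cbp0."):
        problems.append(f"B-Component has no cbp0 unit: {b_comp.cbp_interface}")
    bd_isids = set().union(*b_comp.bd_isid_lists.values())
    local_vlans = set(i_comp.bd_vlans.values())
    for isid, vlans in i_comp.service_isids.items():
        if isid not in b_comp.extended_isid_list:
            problems.append(f"I-SID {isid} missing from extended-isid-list")
        if isid not in bd_isids:
            problems.append(f"I-SID {isid} not in any B-Component isid-list")
        for vlan in vlans:
            if vlan not in local_vlans:
                problems.append(f"VLAN {vlan} for I-SID {isid} has no I-Component bridge-domain")
    return problems


# The lab configuration from this post, transcribed by hand
B_COMP = BComponent("PBB-EVPN-B-COMP", "cbp0.1000", frozenset({100100}),
                    {"BR-B-100100": frozenset({100100})})
I_COMP = IComponent("PBB-EVPN-I-COMP", "pip0.1000", "PBB-EVPN-B-COMP",
                    {"BR-I-100": 100}, {100100: [100]})

if __name__ == "__main__":
    print(check_pbb_evpn(I_COMP, B_COMP))  # []
    typo = replace(B_COMP, extended_isid_list=frozenset({100200}))
    print(check_pbb_evpn(I_COMP, typo))    # ['I-SID 100100 missing from extended-isid-list']
```

The I-SID is the thread that ties the two routing-instances together. Here it appears three times: in "extended-isid-list", in the B-Component bridge-domain "isid-list" and in the I-Component "pbb-service-options".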

A lot to remember so far! Another point worth mentioning is that PBB-EVPN doesn't work unless you have the router set for “enhanced-ip mode”:

```
chassis {
    network-services enhanced-ip;
}
```

And, like our regular EVPN configuration from previous blog posts, we just have basic EVPN signalling turned on inside BGP:

```
protocols {
    bgp {
        group mesh {
            type internal;
            local-address 10.10.10.1;
            family evpn {
                signaling;
            }
            neighbor 10.10.10.2;
            neighbor 10.10.10.3;
        }
    }
}
```

Having basic BGP EVPN signalling turned on is a real advantage, as it keeps the service in line with modern MPLS-based offerings. These have a simple LDP/IGP core, with all the edge services (L3VPN, multicast, IPv4, IPv6, L2VPN) controlled by a single protocol: BGP, which we all know and love.

So I have this configuration running on 3x MX5 routers. The configurations from the above snippets are identical across all 3x routers, with the obvious exception of IP addresses. Let's recap the diagram:

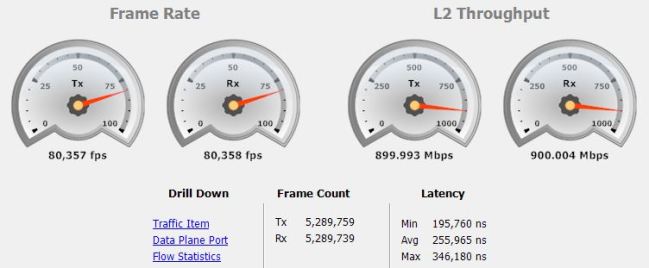

With the configuration applied, I'll go ahead and spawn 3000 hosts using IXIA. Each host is an emulated machine sat on the same layer-2 /16 subnet, with VLAN-100 spanned across all three sites in the PBB-EVPN. Basically, just imagine MX-1, MX-2 and MX-3 as switches with 1000 laptops plugged into each one 🙂 To keep things simple, I'm only going to use single-tagged frames directly from IXIA and send a full mesh of traffic to all sites. Each stream runs at 300Mbps, so with 3x sites that's 900Mbps of traffic in total.

Traffic appears to be successfully forwarded end to end without delay, so let's check some of the show commands on MX-1.

We can clearly see 3000 hosts inside the bridge mac-table on MX5-1:

```
tim@MX5-1> show bridge mac-table count

3 MAC address learned in routing instance PBB-EVPN-B-COMP bridge domain BR-B-100100

  MAC address count per learn VLAN within routing instance:
    Learn VLAN ID            MAC count
              999                    3

3000 MAC address learned in routing instance PBB-EVPN-I-COMP bridge domain BR-I-100

  MAC address count per interface within routing instance:
    Logical interface        MAC count
    ge-1/0/0.100:100              1000
    rbeb.32768                    1000
    rbeb.32769                    1000

  MAC address count per learn VLAN within routing instance:
    Learn VLAN ID            MAC count
              100                 3000

tim@MX5-1>
```

- The first section shows the B-Component, with 3x B-MACs learnt via the PBB-EVPN

- The second section shows the I-Component and all 3000 mac-addresses live on the network: 1000 learnt locally via the directly connected interface, and 2000 learnt via rbeb.32768 and rbeb.32769. RBEB is the remote backbone edge bridge. Once the frames come in from the EVPN and the PBB headers are popped, the original C-MACs are learnt. That is why we see 3000 MACs in the I-Component locally, whilst the B-Component holds only 3x B-MACs.
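The arithmetic behind these counters (1000 local plus 2x 1000 behind the remote BEBs) can be checked with a small Python parser. It is written against the output exactly as shown above, and other Junos releases may format it differently. For anything beyond a quick sketch, the structured "| display xml" output would be a safer thing to parse.

```python
import re
from typing import Dict, Tuple

# Header line that opens each routing-instance section of the output
LEARNED = re.compile(
    r"^(\d+) MAC address learned in routing instance (\S+) bridge domain (\S+)$")
# A "<logical interface> <count>" row
ROW = re.compile(r"^(\S+)\s+(\d+)$")

SAMPLE = """\
tim@MX5-1> show bridge mac-table count
3 MAC address learned in routing instance PBB-EVPN-B-COMP bridge domain BR-B-100100
MAC address count per learn VLAN within routing instance:
Learn VLAN ID MAC count
999 3
3000 MAC address learned in routing instance PBB-EVPN-I-COMP bridge domain BR-I-100
MAC address count per interface within routing instance:
Logical interface MAC count
ge-1/0/0.100:100 1000
rbeb.32768 1000
rbeb.32769 1000
MAC address count per learn VLAN within routing instance:
Learn VLAN ID MAC count
100 3000
"""


def parse_mac_counts(text: str) -> Dict[str, dict]:
    """Map routing-instance name -> total MAC count and per-interface counts."""
    instances: Dict[str, dict] = {}
    current = None
    in_interface_rows = False
    for raw in text.splitlines():
        line = raw.strip()
        header = LEARNED.match(line)
        if header:
            current = {"total": int(header.group(1)), "per_interface": {}}
            instances[header.group(2)] = current
            in_interface_rows = False
        elif line.startswith("MAC address count per interface"):
            in_interface_rows = True
        elif line.startswith("MAC address count per learn VLAN"):
            in_interface_rows = False  # skip the per-VLAN rows
        elif in_interface_rows and current is not None:
            row = ROW.match(line)
            if row:
                current["per_interface"][row.group(1)] = int(row.group(2))
    return instances


def split_local_remote(per_interface: Dict[str, int]) -> Tuple[int, int]:
    """C-MACs learnt on local ports vs. behind remote BEBs (rbeb.*)."""
    remote = sum(n for ifl, n in per_interface.items() if ifl.startswith("rbeb."))
    local = sum(per_interface.values()) - remote
    return local, remote


if __name__ == "__main__":
    counts = parse_mac_counts(SAMPLE)
    icomp = counts["PBB-EVPN-I-COMP"]
    print(counts["PBB-EVPN-B-COMP"]["total"])                         # 3
    print(icomp["total"], split_local_remote(icomp["per_interface"]))  # 3000 (1000, 2000)
```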

Let's look at the BGP table:

```
tim@MX5-1> show bgp summary
Groups: 1 Peers: 2 Down peers: 0
Table          Tot Paths  Act Paths Suppressed    History Damp State    Pending
bgp.evpn.0
                       4          4          0          0          0          0
Peer                     AS      InPkt     OutPkt    OutQ   Flaps Last Up/Dwn State|#Active/Received/Accepted/Damped...
10.10.10.2              100       1183       1177       0       0     8:50:22 Establ
  bgp.evpn.0: 2/2/2/0
  PBB-EVPN-B-COMP.evpn.0: 2/2/2/0
  __default_evpn__.evpn.0: 0/0/0/0
10.10.10.3              100       1183       1179       0       0     8:50:18 Establ
  bgp.evpn.0: 2/2/2/0
  PBB-EVPN-B-COMP.evpn.0: 2/2/2/0
  __default_evpn__.evpn.0: 0/0/0/0

tim@MX5-1> show route protocol bgp table PBB-EVPN-B-COMP.evpn.0

PBB-EVPN-B-COMP.evpn.0: 6 destinations, 6 routes (6 active, 0 holddown, 0 hidden)
+ = Active Route, - = Last Active, * = Both

2:1.1.1.1:100::100100::a8:d0:e5:5b:75:c8/304 MAC/IP
                   *[BGP/170] 08:25:43, localpref 100, from 10.10.10.2
                      AS path: I, validation-state: unverified
                    > to 192.169.100.11 via ge-1/1/0.0, Push 299904
2:1.1.1.1:100::100100::a8:d0:e5:5b:94:60/304 MAC/IP
                   *[BGP/170] 08:24:56, localpref 100, from 10.10.10.3
                      AS path: I, validation-state: unverified
                    > to 192.169.100.11 via ge-1/1/0.0, Push 299920
3:1.1.1.1:100::100100::10.10.10.2/304 IM
                   *[BGP/170] 08:25:47, localpref 100, from 10.10.10.2
                      AS path: I, validation-state: unverified
                    > to 192.169.100.11 via ge-1/1/0.0, Push 299904
3:1.1.1.1:100::100100::10.10.10.3/304 IM
                   *[BGP/170] 08:24:57, localpref 100, from 10.10.10.3
                      AS path: I, validation-state: unverified
                    > to 192.169.100.11 via ge-1/1/0.0, Push 299920

tim@MX5-1>
```

Here we can quickly see the savings made in memory, CPU and control-plane processing. We have a stretched layer-2 network with 3000 hosts. In regular EVPN we'd by now have 3000 EVPN MAC routes being advertised and received across the network, despite only 3x sites being in play. With PBB-EVPN there are only 3x B-MACs, and MX-1's BGP table holds MAC routes for just the 2x remote ones: a8:d0:e5:5b:75:c8 from 10.10.10.2 and a8:d0:e5:5b:94:60 from 10.10.10.3. Alongside those sits one inclusive-multicast route from each peer.

Technically we could have a million mac-addresses at each site. Provided the switches could handle that many mac-addresses, we'd still only be advertising the 3x B-MACs across the core from the B-Component, so PBB-EVPN does provide massive scale. It's true that locally we'd still need to learn 1 million C-MACs. The difference is that we don't need to advertise them all back and forth across the network: that state remains local and is represented by a B-MAC and I-SID for that specific customer or service.
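A quick way to see why the host count drops out of the BGP table is a small Python model of PBB-EVPN state on one PE. It assumes the shape of this lab: one I-SID, single-homed sites and one B-MAC per PE. It counts one type-2 route per remote B-MAC plus one type-3 IM route per remote PE, while C-MACs are only learnt in the data plane. The million-hosts case is a hypothetical projection.

```python
from typing import Tuple


def pbb_evpn_state(sites: int, hosts_per_site: int,
                   bmacs_per_pe: int = 1) -> Tuple[int, int]:
    """Return (BGP EVPN routes one PE receives, C-MACs its I-Component may hold).

    Assumes one I-SID, single-homed sites and the given B-MACs per PE.
    BGP carries one type-2 route per remote B-MAC plus one type-3 IM route per
    remote PE; C-MACs are learnt in the data plane, so they never enter BGP.
    """
    remote_pes = sites - 1
    bgp_routes = remote_pes * (bmacs_per_pe + 1)
    cmacs = sites * hosts_per_site  # upper bound: every host in the bridge domain
    return bgp_routes, cmacs


if __name__ == "__main__":
    print(pbb_evpn_state(sites=3, hosts_per_site=1000))       # (4, 3000), as on MX5-1
    print(pbb_evpn_state(sites=3, hosts_per_site=1_000_000))  # (4, 3000000)
```

Only the second number grows with the host count, and that state lives in the forwarding plane of each PE rather than in BGP.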

We can take a look at the bridge mac-table to see the different mac-addresses in play, for both the B-Component and the I-Component:

```
tim@MX5-1> show bridge mac-table

MAC flags (S -static MAC, D -dynamic MAC, L -locally learned, C -Control MAC
    O -OVSDB MAC, SE -Statistics enabled, NM -Non configured MAC, R -Remote PE MAC)

Routing instance : PBB-EVPN-B-COMP
 Bridging domain : BR-B-100100, VLAN : 999
   MAC                 MAC      Logical          NH       RTR
   address             flags    interface        Index    ID
   01:1e:83:01:87:04   DC                        1048575  0
   a8:d0:e5:5b:75:c8   DC                        1048576  1048576
   a8:d0:e5:5b:94:60   DC                        1048578  1048578

MAC flags (S -static MAC, D -dynamic MAC,
           SE -Statistics enabled, NM -Non configured MAC)

Routing instance : PBB-EVPN-I-COMP
 Bridging domain : BR-I-100, ISID : 100100, VLAN : 100
   MAC                 MAC      Logical          Remote
   address             flags    interface        BEB address
   00:00:00:bc:25:2f   D        ge-1/0/0.100
   00:00:00:bc:25:31   D        ge-1/0/0.100
   00:00:00:bc:25:33   D        ge-1/0/0.100
   00:00:00:bc:25:35   D        ge-1/0/0.100
   00:00:00:bc:25:37   D        ge-1/0/0.100
   00:00:00:bc:25:39   D        ge-1/0/0.100
   00:00:00:bc:25:3b   D        ge-1/0/0.100
   00:00:00:bc:25:3d   D        ge-1/0/0.100
   <omitted>
   00:00:00:bc:2f:67   D        rbeb.32768       a8:d0:e5:5b:75:c8
   00:00:00:bc:2f:69   D        rbeb.32768       a8:d0:e5:5b:75:c8
   00:00:00:bc:2f:6b   D        rbeb.32768       a8:d0:e5:5b:75:c8
   00:00:00:bc:2f:6d   D        rbeb.32768       a8:d0:e5:5b:75:c8
   00:00:00:bc:2f:6f   D        rbeb.32768       a8:d0:e5:5b:75:c8
   00:00:00:bc:2f:71   D        rbeb.32768       a8:d0:e5:5b:75:c8
   00:00:00:bc:2f:73   D        rbeb.32768       a8:d0:e5:5b:75:c8
   <omitted>
   00:00:00:bc:3c:65   D        rbeb.32769       a8:d0:e5:5b:94:60
   00:00:00:bc:3c:67   D        rbeb.32769       a8:d0:e5:5b:94:60
   00:00:00:bc:3c:69   D        rbeb.32769       a8:d0:e5:5b:94:60
   00:00:00:bc:3c:6b   D        rbeb.32769       a8:d0:e5:5b:94:60
   00:00:00:bc:3c:6d   D        rbeb.32769       a8:d0:e5:5b:94:60
   00:00:00:bc:3c:6f   D        rbeb.32769       a8:d0:e5:5b:94:60
   00:00:00:bc:3c:71   D        rbeb.32769       a8:d0:e5:5b:94:60
```

Because there are 3000x MAC addresses currently on the network, I've omitted most of them so you can see the important differences:

- The PBB-EVPN-B-COMP section shows the B-MACs. 01:1e:83:01:87:04 is the local B-MAC for MX-1, while a8:d0:e5:5b:75:c8 and a8:d0:e5:5b:94:60 are the B-MACs learnt from MX-2 and MX-3 via BGP. The same two MACs appear as MAC/IP routes in the BGP routing table in the previous example

- The entries on ge-1/0/0.100 give a small sample of the locally learnt mac-addresses in the I-Component

- The entries on rbeb.32768 give a small sample of the mac-addresses learnt remotely from MX-2's B-MAC (a8:d0:e5:5b:75:c8)

- The entries on rbeb.32769 give a small sample of the mac-addresses learnt remotely from MX-3's B-MAC (a8:d0:e5:5b:94:60)

- Essentially, the EVPN control-plane is only present for B-MACs, whilst C-MAC forwarding is handled on the forwarding plane in the same way as VPLS. The big advantage is that all this information isn't thrown into BGP; it's kept locally.
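The split between B-MACs in the control plane and C-MACs in the data plane can be illustrated with a toy Python model of one I-Component MAC table. The class and method names are hypothetical. The model ignores ageing, flooding mechanics and hardware programming. It simply records whether each C-MAC is local or sits behind a remote B-MAC, much like the rbeb.* entries above.

```python
from typing import Dict, Optional, Set

LOCAL = "local"


class CmacTable:
    """Toy I-Component MAC table for one I-SID, learnt from the data plane."""

    def __init__(self, isid: int) -> None:
        self.isid = isid
        self.entries: Dict[str, str] = {}  # C-MAC -> "local" or remote B-MAC

    def learn_local(self, c_sa: str) -> None:
        # frame arrived on the customer-facing interface (ge-1/0/0.100)
        self.entries[c_sa] = LOCAL

    def learn_from_core(self, c_sa: str, b_sa: str) -> None:
        # frame arrived from the core: once the PBB header is popped, the inner
        # C-SA is bound to the sending BEB's B-MAC (shown as rbeb.* on MX-1)
        self.entries[c_sa] = b_sa

    def lookup(self, c_da: str) -> Optional[str]:
        # "local", the remote B-MAC to use as B-DA, or None (unknown: flood)
        return self.entries.get(c_da)

    def remote_bmacs(self) -> Set[str]:
        # the only addresses the EVPN control plane has to carry for this table
        return {where for where in self.entries.values() if where != LOCAL}


if __name__ == "__main__":
    table = CmacTable(isid=100100)
    # hypothetical hosts: 1000 local and 1000 behind each remote BEB
    for i in range(1000):
        suffix = f"{i >> 8:02x}:{i & 0xff:02x}"
        table.learn_local(f"00:00:00:01:{suffix}")
        table.learn_from_core(f"00:00:00:02:{suffix}", "a8:d0:e5:5b:75:c8")
        table.learn_from_core(f"00:00:00:03:{suffix}", "a8:d0:e5:5b:94:60")
    print(len(table.entries), len(table.remote_bmacs()))  # 3000 2
```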

Finally, with traffic running, I have the connection between MX-1 and P1 tapped so I can capture packets into Wireshark at line-rate. Let's look at a packet in the middle of the network to see what it looks like:

We can see the MPLS labels (2x labels, one for the IGP transport and one for the EVPN). Below that we have our backbone Ethernet header with the source and destination B-MACs (802.1ah provider backbone bridge). Next comes our 802.1ah PBB I-SID field with the customer's original C-MAC, and last of all the original dot1q frame (single-tagged in this case).
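To make the label stack in the capture concrete, here is a minimal Python sketch that packs and unpacks MPLS label stack entries (20-bit label, 3-bit traffic class, bottom-of-stack bit, 8-bit TTL).

- The transport label 299904 is the one shown in the "Push" action toward MX-2 in the route output earlier.
- The EVPN label 300000 is a made-up placeholder.
- The B-Component config enables control-word, so on the wire a 4-byte control word would be expected after the bottom label. This sketch doesn't parse it; it just returns everything after the bottom-of-stack entry as payload.

```python
import struct
from typing import List, NamedTuple, Tuple


class LabelEntry(NamedTuple):
    label: int    # 20-bit label value
    tc: int       # 3-bit traffic class
    bottom: bool  # S bit: set on the last entry of the stack
    ttl: int      # 8-bit TTL


def encode_stack(labels: List[int], tc: int = 0, ttl: int = 64) -> bytes:
    """Pack labels (outermost first) into 4-byte MPLS label stack entries."""
    out = b""
    for i, label in enumerate(labels):
        if not 0 <= label < 2 ** 20:
            raise ValueError(f"label {label} does not fit in 20 bits")
        bottom = 1 if i == len(labels) - 1 else 0
        word = (label << 12) | ((tc & 0x7) << 9) | (bottom << 8) | (ttl & 0xFF)
        out += struct.pack("!I", word)
    return out


def decode_stack(data: bytes) -> Tuple[List[LabelEntry], bytes]:
    """Read entries until the S bit is set; return them plus the remaining payload."""
    entries: List[LabelEntry] = []
    offset = 0
    while offset + 4 <= len(data):
        (word,) = struct.unpack_from("!I", data, offset)
        offset += 4
        entry = LabelEntry(word >> 12, (word >> 9) & 0x7, bool(word & 0x100), word & 0xFF)
        entries.append(entry)
        if entry.bottom:
            return entries, data[offset:]
    raise ValueError("no bottom-of-stack label found")


if __name__ == "__main__":
    TRANSPORT = 299904   # LDP label seen in the "Push" toward MX-2
    EVPN_LABEL = 300000  # hypothetical placeholder
    packet = encode_stack([TRANSPORT, EVPN_LABEL]) + b"<PBB frame>"
    entries, payload = decode_stack(packet)
    print([e.label for e in entries], payload)  # [299904, 300000] b'<PBB frame>'
```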

So that’s pretty much it – as far as the basics of PBB-EVPNs are concerned, a few basic points;

- PBB-EVPNs are considerably more complicated to configure than regular EVPN, however if you need massive scale and the ability to handle hundreds of thousands or millions of mac-addresses on the network – it’s currently one of the best technologies to look at

- Unfortunately PBB-EVPN is pretty much layer-2 only. Most of the fancy layer-3 hooks built into regular EVPN, which I demonstrated in previous blog posts, aren't supported for PBB-EVPN; it is essentially a layer-2 solution

- PBB-EVPN does support layer-2 multi-homing which I might look into with a later blog-post

I hope anyone reading this found it useful 🙂

Hi, great post and such detailed information on the why, impressed!

A question: the hardware here is a TRIO chipset, and I need to look further into it, but would it be too much to ask to get this working on vMX, which has standard virtual PICs running v16.1?


Hi, thanks for the kind comments 🙂 The honest answer is “I don't know”. I do know that regular EVPN works on vMX, but I haven't tried PBB encapsulation. There were some restrictions around PBB on some Juniper cards previously, so my best advice is to just try it!


It’s not going to work on a 16.x


Hi, I’ve just discovered this blog while searching for EVPN-MH stuff.

Well, thanks a lot for taking the time to write these things down, and in such a clear way.

Great resource!


Thanks for the kind comments! 🙂


Great post! I spent endless hours with PBB/EVPN on Junos. This blog gives a very good explanation on why and how!


I need to say: this post was terrific. I started studying L2VPN a few days ago and didn't understand the differences and limitations. Regarding EVPN and PBB-EVPN, I understood perfectly because you're so clear and didactic. Thank you very much!


Thanks for the kind comments! glad you found it useful 🙂


Incredibly clear post on a complicated topic, thank you, wish more networking material was written in this style!


Nicely explained 🙂


Good Post !! well done

You've explained EVPN-PBB, but what is the difference between that and EVPN-VXLAN?

What would be the benefits of one over the other?

What would be good to cover is a new section on L2 and L3 DC-to-DC connectivity over a DCI fabric design, and how each DC breaks out into a regular layer-3 VPN VRF for remote CEs requiring access to the DC domain.

Cheers
